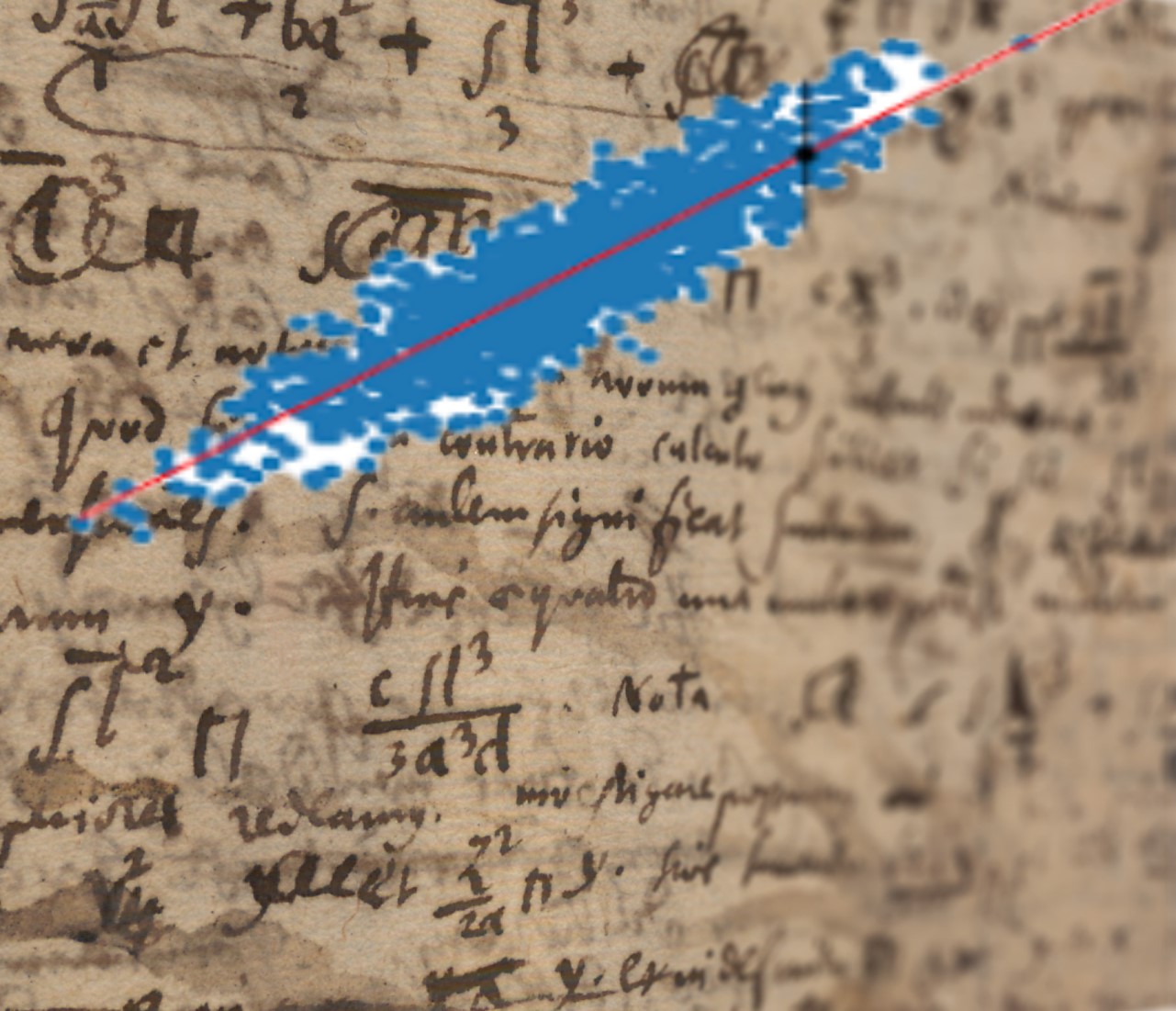

Short dictionary of machine learning

The elements of big data analytics have roots in statistics, knowledge management, and computer science. Many of the data mining terms below appear in these disciplines but may have a different connotation or a specialized meaning when applied to our problems. The problems of massively parallel processing and the specialized algorithms employed to perform analysis in a…

Simple runbook template

We use a runbook template to document which processes run automatically or on demand. Typically these processes are either date-triggered or event-triggered. A date-triggered process might be a scheduled email sent every night at midnight. An event-triggered process might send an email when any disk on a server…
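To make the two trigger types concrete, here is a minimal Python sketch of how runbook entries might be modeled. The `RunbookEntry` class, the `should_run` helper, and the `disk_alert` event name are hypothetical illustrations, not part of the template itself, and the event-triggered entry does not try to reconstruct the exact disk condition from the post.

```python
from dataclasses import dataclass
from datetime import datetime, time
from typing import Optional


@dataclass
class RunbookEntry:
    """One documented process in a runbook (hypothetical structure)."""
    name: str
    trigger: str                      # "date" or "event"
    run_at: Optional[time] = None     # used only by date-triggered entries
    event_name: Optional[str] = None  # used only by event-triggered entries


def should_run(entry: RunbookEntry, now: datetime, event: Optional[str] = None) -> bool:
    """Return True if the entry's trigger condition is met at `now`."""
    if entry.trigger == "date":
        # Date-triggered: fire when the clock reaches the scheduled hour and minute.
        return entry.run_at is not None and (now.hour, now.minute) == (
            entry.run_at.hour,
            entry.run_at.minute,
        )
    if entry.trigger == "event":
        # Event-triggered: fire only when a matching event has been reported.
        return event is not None and event == entry.event_name
    raise ValueError(f"unknown trigger type: {entry.trigger!r}")


# Hypothetical runbook mirroring the two examples above.
runbook = [
    RunbookEntry("nightly email", trigger="date", run_at=time(0, 0)),
    RunbookEntry("disk alert email", trigger="event", event_name="disk_alert"),
]
```

For example, checking the runbook at midnight with no event reported selects only the nightly email, while the disk alert email runs at any time once a `disk_alert` event arrives. Keeping the trigger type as an explicit field makes it easy to document, per entry, whether the process is scheduled or reactive.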

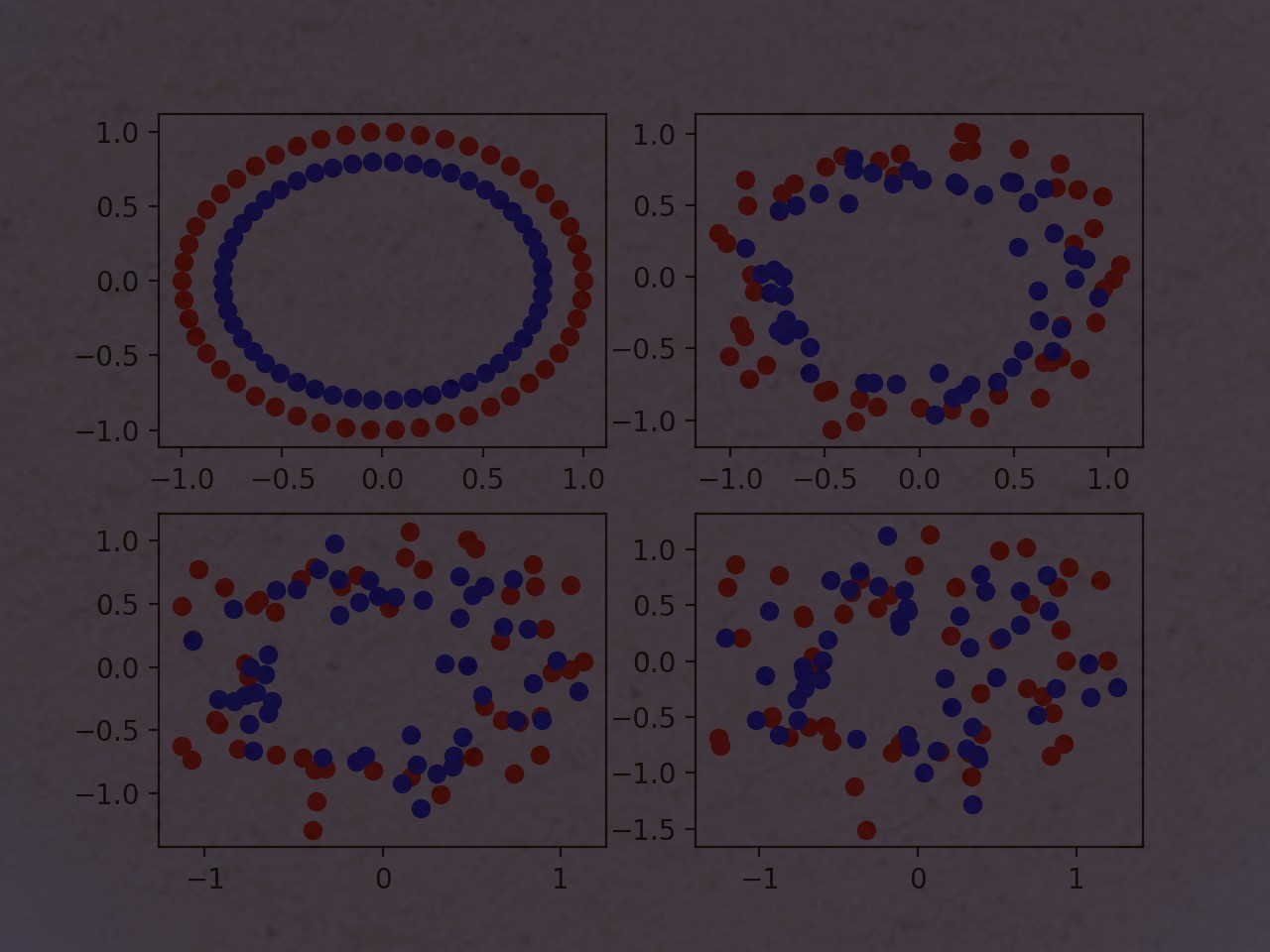

Free machine learning datasets

Below are many free, downloadable machine learning datasets. They cover click data, air traffic control data, surveys, temporal datasets of various types, crime data, employee pay data, map data, law data, and many other types. I am a huge fan of SPSS, scikit-learn, OpenNLP, and other mainstream libraries, but for quick analysis and visualization don’t…