Planning and Communicating Your Cluster Design When creating a new Amazon Web Services (AWS) hadoop cluster it is overwhelming for most people to put together a configuration plan or topology. Below is a Hadoop reference architecture template I’ve built that can be filled in that addresses the key aspects of planning, building, configuring, and communicating… Continue reading Fillable Hadoop reference architecture template for AWS clusters

Tag: big data

Double your effective IO on AWS EBS-backed volumes

NOTE: This content is for archive purposes only. With generation 4+ EBS volumes big data IO performance no longer requires volume prewarming. Fresh Elastic Block Storage volumes have first-write overhead At my employer I architect Big Data hybrid cloud platforms for global audience that have to be FAST. In our cluster provisioning I find we frequently… Continue reading Double your effective IO on AWS EBS-backed volumes

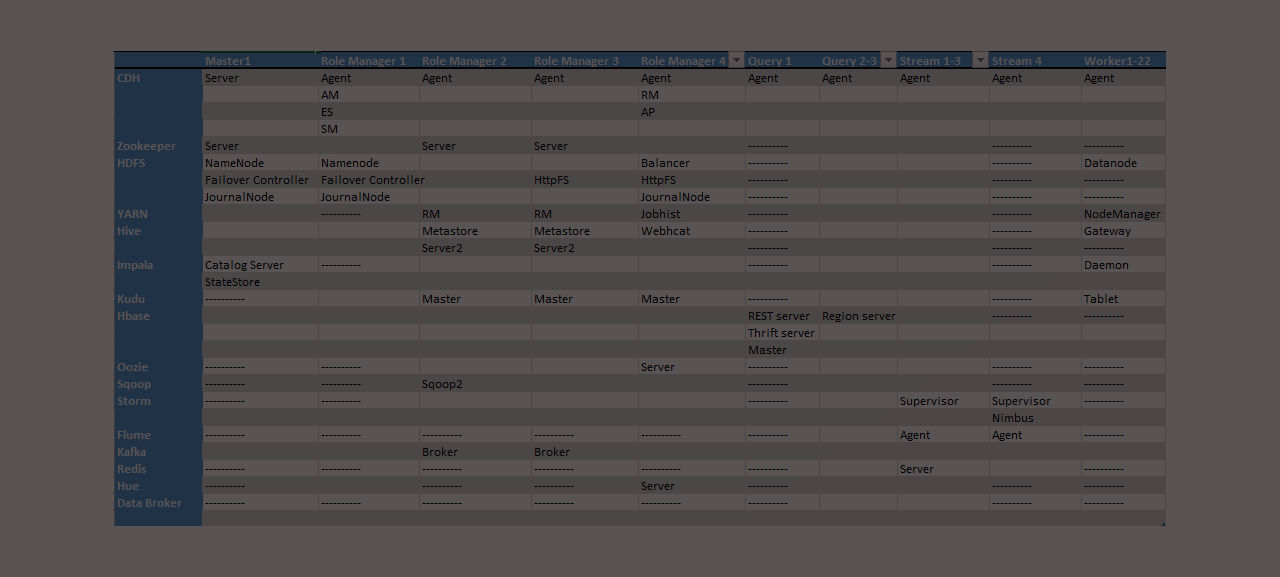

Example Big Data dev cluster topology

Below is an example dev cluster topology for a Big Data development cluster as I’ve actually used for some customers. It’s composed of 6 Amazon Web Service (AWS) servers, each with a particular purpose. We have been able to perform full lambda using this topology along with Teiid (for data abstraction) on terabytes of data.… Continue reading Example Big Data dev cluster topology